Introduction: Why Thermal Modeling Needs a Better Feedback Loop

Thermal behavior is one of the hardest parts of engineering systems to model accurately. Heat evolves over time, depends on geometry and materials, and often requires expensive simulations to predict correctly. Traditional physics-based models are accurate, but slow. Purely data-driven models are fast, but often brittle and hard to trust.

In recent years, a new class of approaches has started to bridge that gap: physics-informed machine learning. Among them, Thermal Neural Networks stand out as a practical way to combine first-principles thermal modeling with the flexibility of neural networks.

In this post, we explore how Thermal Neural Networks work, why they matter for engineers, and what they enable in real-world applications, drawing on insights from our recent podcast conversation with Tobias Moroder, Data Scientist, and Georg Goeppert, Systems Engineer at Schaeffler.

The Limits of Traditional Thermal Modeling

Thermal systems are often modeled using lumped-parameter approaches, finite-element simulations, or simplified equivalent circuits. These methods work well when the system is well understood and time is available to tune parameters, but they quickly become bottlenecks in modern engineering workflows.

As systems grow more complex, thermal models become:

- Harder to parameterize accurately

- Computationally expensive to simulate

- Difficult to adapt when operating conditions change

At the same time, purely data-driven approaches, such as black-box neural networks, introduce their own problems. They may fit training data extremely well but often violate physical laws, behave unpredictably outside the training range, or fail silently in safety-critical scenarios.

Engineers are left choosing between interpretability and flexibility, or between speed and trustworthiness.

What Are Thermal Neural Networks?

Thermal Neural Networks (TNNs) sit between these two extremes.

Instead of learning temperature behavior from scratch, TNNs embed physical structure directly into the neural network architecture. The model is designed to mirror how heat actually flows through a system (respecting conservation laws, energy balances, and thermal resistances) while still learning unknown parameters from data.
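To make the energy balance concrete, one common lumped-parameter form is shown below. The symbols are notation chosen here for illustration and are not taken from the podcast: $T_i$ is the temperature of node $i$, $C_i$ its heat capacity, $G_{ij} = 1/R_{ij}$ the thermal conductance (inverse thermal resistance) between nodes $i$ and $j$, and $P_i$ the heat generated in node $i$.

```latex
C_i \frac{\mathrm{d}T_i}{\mathrm{d}t} = \sum_{j \neq i} G_{ij}\,\bigl(T_j - T_i\bigr) + P_i
```

In a hybrid model of this kind, the form of the equation stays fixed, while quantities such as $C_i$, $G_{ij}$, or $P_i$ are the parts that can be learned from data.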

In practice, this often means representing a thermal system as a network of nodes and connections, where:

- Nodes correspond to physical components or temperature states

- Edges represent heat transfer paths

- Neural network components learn parameters such as conductances or heat capacities

This approach combines the reliability of physics-based thermal models with the adaptability of machine learning. The model retains a physically meaningful structure, but learns from data over time, making it both interpretable and responsive to real-world variability.
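The node-and-edge picture above can be sketched in a few lines of Python. This is a minimal illustration under assumed values, not the implementation discussed in the podcast: the `ThermalNetwork` class, the two-node "winding and housing" layout, and every number are hypothetical, and the parameters are fixed by hand here rather than learned.

```python
from dataclasses import dataclass


@dataclass
class ThermalNetwork:
    """Hypothetical lumped thermal network: nodes hold temperatures, edges carry heat."""
    capacities: list            # heat capacity of each node, J/K
    conductances: dict          # edge (i, j) -> thermal conductance, W/K
    ambient_conductances: list  # conductance from each node to ambient, W/K

    def step(self, temps, powers, t_ambient, dt):
        """Advance all node temperatures by one forward-Euler step of length dt."""
        # Heat generated in each node plus heat exchanged with ambient, in W
        net_heat = [
            p + g * (t_ambient - t)
            for p, g, t in zip(powers, self.ambient_conductances, temps)
        ]
        # Heat leaving one node along an edge enters the other, so energy is conserved
        for (i, j), g in self.conductances.items():
            flow = g * (temps[j] - temps[i])  # heat flowing from node j into node i, W
            net_heat[i] += flow
            net_heat[j] -= flow
        return [t + dt * q / c for t, q, c in zip(temps, net_heat, self.capacities)]


# Assumed example: node 0 is a winding dissipating 100 W, node 1 is a housing
net = ThermalNetwork(
    capacities=[50.0, 200.0],
    conductances={(0, 1): 2.0},
    ambient_conductances=[0.0, 4.0],
)
temps = [25.0, 25.0]
for _ in range(20000):  # 2000 s of simulated time at dt = 0.1 s
    temps = net.step(temps, powers=[100.0, 0.0], t_ambient=25.0, dt=0.1)

# Steady state by hand: housing = 25 + 100/4 = 50, winding = 50 + 100/2 = 100
print(temps)
```

In a thermal neural network, the fixed numbers in `conductances` and `capacities` would instead come from trainable components, while the bookkeeping in `step` stays the same.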

Combining Thermal Physics with Data-Driven Learning

The key insight behind thermal neural networks is that structure matters more than raw data volume.

Because the model already “knows” how heat should behave, it requires far less data than a fully black-box neural network. At the same time, it can handle nonlinearities, parameter drift, and unmodeled dynamics that would be painful to capture manually.

This leads to several practical advantages:

- Data efficiency: Useful models can be trained with limited experimental data

- Physical plausibility: Predictions remain consistent with thermodynamic principles

- Generalization: Models extrapolate more reliably to new operating conditions

- Interpretability: Engineers can inspect learned parameters and relate them back to physical quantities

For teams working in regulated or safety-critical domains, this combination is especially compelling.

From Offline Models to Digital Twins

One of the most compelling applications of thermal neural networks is their role in enabling truly adaptive digital twins.

Traditional digital twins are often built on fixed, physics-based thermal models. While these models can be highly accurate at deployment, they tend to drift over time as systems age, components degrade, or operating conditions change. Updating them usually requires manual recalibration or even rebuilding the model, an expensive and time-consuming process.

Thermal neural networks take a different approach. Because they combine a physics-based structure with data-driven learning, they can continuously absorb new measurement data while preserving their underlying thermal topology. This allows the digital twin to evolve alongside the physical system it represents.

In practice, this means engineers can maintain real-time visibility into thermal states, anticipate failures as heat paths change, and deploy control strategies that adapt to current operating conditions rather than historical assumptions. Instead of being periodically reset, the digital twin becomes a living representation, refined incrementally as new data arrives without sacrificing physical interpretability.
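As a rough illustration of incremental refinement, the sketch below tracks a single thermal conductance online while the structure of the energy balance stays fixed. It uses scalar recursive least squares with a forgetting factor as a simple stand-in for whatever update rule a real TNN-based twin would use. The class name, the single-node model, and all numbers are assumptions made for this example.

```python
class OnlineConductanceEstimator:
    """Hypothetical online estimator for G in C * dT/dt = P + G * (T_amb - T)."""

    def __init__(self, g_init, capacity, dt, forgetting=0.95, covariance=1000.0):
        self.g = g_init               # current conductance estimate, W/K
        self.capacity = capacity      # heat capacity, assumed known, J/K
        self.dt = dt                  # sample time, s
        self.forgetting = forgetting  # values below 1 discount old measurements
        self.cov = covariance         # uncertainty of the estimate

    def update(self, t_now, t_next, power, t_ambient):
        """Refine the estimate from one pair of consecutive temperature samples."""
        # The discretized energy balance is linear in G: y = x * G
        x = self.dt / self.capacity * (t_ambient - t_now)
        y = t_next - t_now - self.dt / self.capacity * power
        gain = self.cov * x / (self.forgetting + x * self.cov * x)
        self.g += gain * (y - x * self.g)
        self.cov = (self.cov - gain * x * self.cov) / self.forgetting
        return self.g


# Simulated plant whose heat path degrades halfway through (G drops from 2.0 to 1.5)
est = OnlineConductanceEstimator(g_init=1.0, capacity=100.0, dt=1.0)
temp, t_amb, power = 25.0, 25.0, 50.0
history = []
for k in range(1000):
    g_true = 2.0 if k < 500 else 1.5
    t_next = temp + 1.0 / 100.0 * (power + g_true * (t_amb - temp))
    history.append(est.update(temp, t_next, power, t_amb))
    temp = t_next
```

Because the estimated quantity is a physical conductance, a drift in `history` can be read directly as a change in the heat path, which is the kind of interpretability the hybrid approach is meant to preserve.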

Training Thermal Neural Networks in Practice

From a workflow perspective, training a thermal neural network will feel familiar to anyone with experience in system identification or machine learning, but the similarities stop at the surface. Instead of treating the system as a black box and letting a generic network infer relationships from raw inputs and outputs, the engineer starts by encoding what is already known about the physics.

The thermal structure of the system is defined up front: how components exchange heat, which paths dominate conduction or convection, and which relationships are well understood versus uncertain. Based on this structure, the engineer makes deliberate choices about what the model is allowed to learn and what should remain fixed according to physical laws. Data is then used to tune only the unknown or variable parameters, refining the model rather than replacing the physics that underpin it.
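The split between fixed physics and learned parameters can be sketched as follows. This is a minimal example under stated assumptions: a single-node model whose heat capacity is treated as known, one unknown conductance fitted by plain gradient descent on one-step prediction error, and synthetic, noise-free "measurements". The function names, values, and learning rate are hypothetical and tuned to this toy data only.

```python
import math


def simulate(conductance, capacity, powers, t_ambient, t_start, dt):
    """Forward-Euler rollout of C * dT/dt = P + G * (T_amb - T)."""
    temps = [t_start]
    for p in powers:
        t = temps[-1]
        temps.append(t + dt / capacity * (p + conductance * (t_ambient - t)))
    return temps


def fit_conductance(temps, powers, capacity, t_ambient, dt,
                    g_init=0.5, lr=2.0, iters=200):
    """Fit only G; the capacity and the energy-balance structure stay fixed."""
    log_g = math.log(g_init)  # learn log(G) so the conductance stays positive
    n = len(powers)
    for _ in range(iters):
        g = math.exp(log_g)
        grad_g = 0.0
        for k in range(n):
            sens = dt / capacity * (t_ambient - temps[k])  # d(prediction)/dG
            pred = temps[k] + dt / capacity * powers[k] + sens * g
            grad_g += 2.0 * (pred - temps[k + 1]) * sens / n
        log_g -= lr * grad_g * g  # chain rule: dL/dlog(G) = dL/dG * G
    return math.exp(log_g)


# Synthetic data generated with a "true" conductance of 2.0 W/K
C_KNOWN, T_AMB, DT = 100.0, 25.0, 1.0
powers = [50.0] * 300
measured = simulate(2.0, C_KNOWN, powers, T_AMB, t_start=25.0, dt=DT)

g_hat = fit_conductance(measured, powers, C_KNOWN, T_AMB, DT)
print(g_hat)
```

Learning `log(G)` rather than `G` is one simple way to bake a physical constraint (conductances cannot be negative) into the parameterization instead of hoping the data enforces it.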

This fundamentally changes the role of data in the modeling process. Instead of asking the network to discover physics implicitly, data becomes a way to calibrate and adapt a physically meaningful model. As a result, the engineer’s effort shifts away from extensive feature engineering and toward model structuring: deciding how the system should be represented and where learning adds the most value. For many engineering teams, this aligns far better with existing expertise and leads to models that are not only accurate, but also interpretable, maintainable, and trustworthy over time.

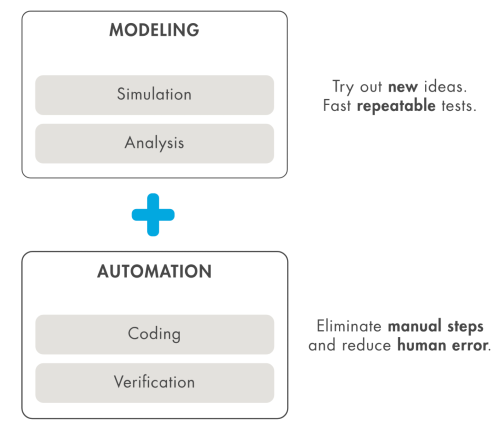

Why Hybrid Thermal Models Change the Engineering Tradeoff

For engineers working with real thermal systems, thermal neural networks are not about chasing the latest AI trend. They address a very practical problem: how to reduce the time and effort spent maintaining accurate thermal models without giving up physical rigor.

Traditional workflows often force teams into repeated cycles of hand-tuning parameters, stabilizing purely data-driven models, or rebuilding simulations as operating conditions drift over time. Hybrid thermal models change that equation. By embedding physical structure directly into the learning process, thermal neural networks remain numerically stable and interpretable, even as they adapt continuously to new data. Instead of fighting model degradation, engineers gain confidence that predictions remain physically meaningful throughout the system’s lifecycle.

This shift has implications beyond individual productivity. For engineering leaders and technical decision-makers, thermal neural networks represent a reallocation of modeling effort rather than an entirely new toolchain. Teams no longer need to choose between simulation-heavy approaches that are costly to maintain and black-box machine learning pipelines that are difficult to trust or audit. A hybrid approach scales more naturally across product variants, integrates cleanly with existing simulation environments, and supports long-term maintainability and traceability.

The net result is a modeling workflow that moves faster without becoming brittle. Development cycles shorten, system monitoring becomes more adaptive, and products in the field remain resilient as real-world conditions change. In that sense, thermal neural networks are less about automation and more about restoring balance: combining data-driven adaptability with the physical foundations that engineering decisions still depend on.

Conclusion: A Pragmatic Path for Engineering AI

Thermal neural networks change the engineering tradeoff between speed and rigor. By embedding physical structure directly into the learning process, they compress the modeling workflow without sacrificing trust: less time goes into hand-tuning thermal parameters, stabilizing black-box models, or rebuilding simulations as operating conditions evolve, while predictions stay physically meaningful as the model adapts to new data.

For engineering leaders and technical decision-makers, this represents a broader shift in how modeling effort is allocated. Rather than choosing between simulation-heavy workflows and purely data-driven pipelines, teams can adopt hybrid approaches that scale across products, integrate naturally with existing simulation tools, and remain maintainable over the long term. The result is faster iteration cycles, more reliable digital twins, and systems that continue to deliver value well beyond initial deployment.

Seen in a wider context, thermal neural networks are part of a growing movement toward physics-informed and structure-aware machine learning. Similar ideas are emerging in fluid dynamics, structural mechanics, and battery modeling, all pointing to the same conclusion: the most effective engineering AI systems don’t replace physics; they operationalize it. As tools mature and workflows become more accessible, these hybrid models are likely to move from niche techniques to default choices in engineering practice.

Thermal neural networks are still evolving, but their direction is clear. By blending physical insight with learning capability, they offer a pragmatic path forward for engineers and organizations that want better models, faster feedback, and greater resilience, without abandoning the principles that make engineering reliable in the first place.

Go Deeper

If you’re interested in how hybrid modeling and AI-assisted workflows are being integrated into real engineering tools, MathWorks has published several practical resources worth exploring:

- Thermal Neural Networks - Podcast with Tobias Moroder & Georg Goeppert: https://www.youtube.com/watch?v=ySRjX9GUiCA

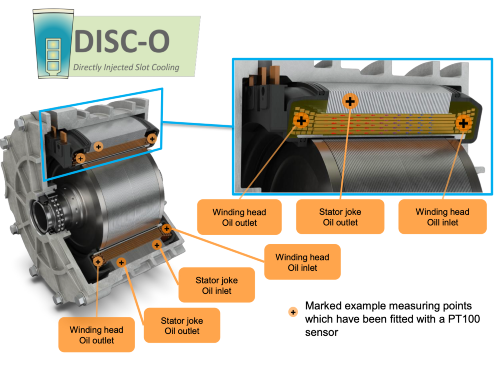

- Thermal Neural Network for Temperature Modeling in E-Motors: https://www.mathworks.com/content/dam/mathworks/mathworks-dot-com/company/events/conferences/matlab-expo-online/2025/ww-expo-2025-schaeffler-temperature-modeling.pdf

- MATLAB Code Generation with the Help of an MCP Server: https://blogs.mathworks.com/community/2025/06/17/matlab-code-generation-with-the-help-of-an-mcp-server/