A Turning Point for Multiphysics Design

Engineering teams across industries face a quiet but persistent bottleneck: simulations are powerful, yet slow, and - more importantly - only scratch the surface of what’s possible in a design space. In a recent deep-dive session, Quanscient’s founders presented the philosophy, technical workflow, and real-world applications behind MultiphysicsAI.

For decades, simulation has been used mainly as a validation tool rather than an exploration engine. According to Quanscient’s founders, less than 1% of any design space is typically explored, leaving vast innovation potential untouched.

Today, a fundamentally different approach is emerging: one that blends cloud-native multiphysics simulation with AI-driven inference to unlock thousands of design variations, uncover hidden trade-offs, and accelerate product development far beyond traditional limits.

This new paradigm is MultiphysicsAI - and in this blog post, we’ll explore how it works, why it matters, and how its capabilities unfold through real engineering case studies.

For a broader technical perspective, you can also revisit our previous podcast episode discussing Quanscient’s approach in detail.

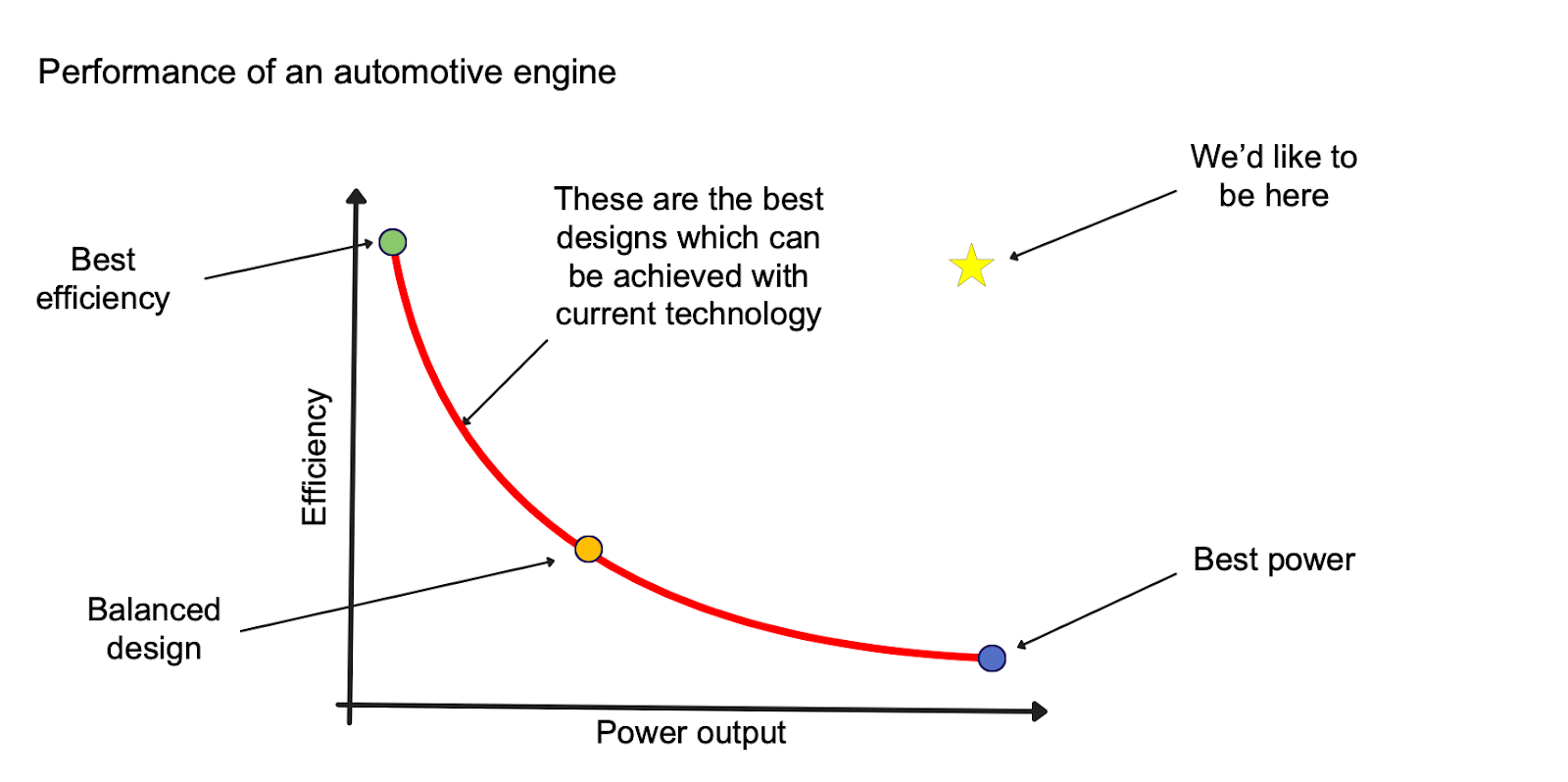

Why Engineering Needs a New Approach

Traditionally, engineers design a device, run a handful of simulations, tweak the geometry, rerun the model, and inch their way toward an acceptable solution. This iterative loop works, but it’s slow and risks missing better-performing designs entirely.

As Quanscient’s CEO and co-founder Juha Riipi explains, the constraint has never been imagination - it’s been compute, bandwidth, and tool limitations. Engineers simply couldn’t run the thousands of simulations required to explore a large design space.

However, three shifts have converged:

- Cloud-scale multiphysics solvers capable of massively parallel simulation

- High-quality, strongly coupled physics data generated at scale

- AI models that can learn from these datasets and infer outcomes in milliseconds

Together, they enable something radically new: AI-assisted exploration of an entire design space, not just a sliver of it.

From Simulation as Validation to Simulation as Discovery

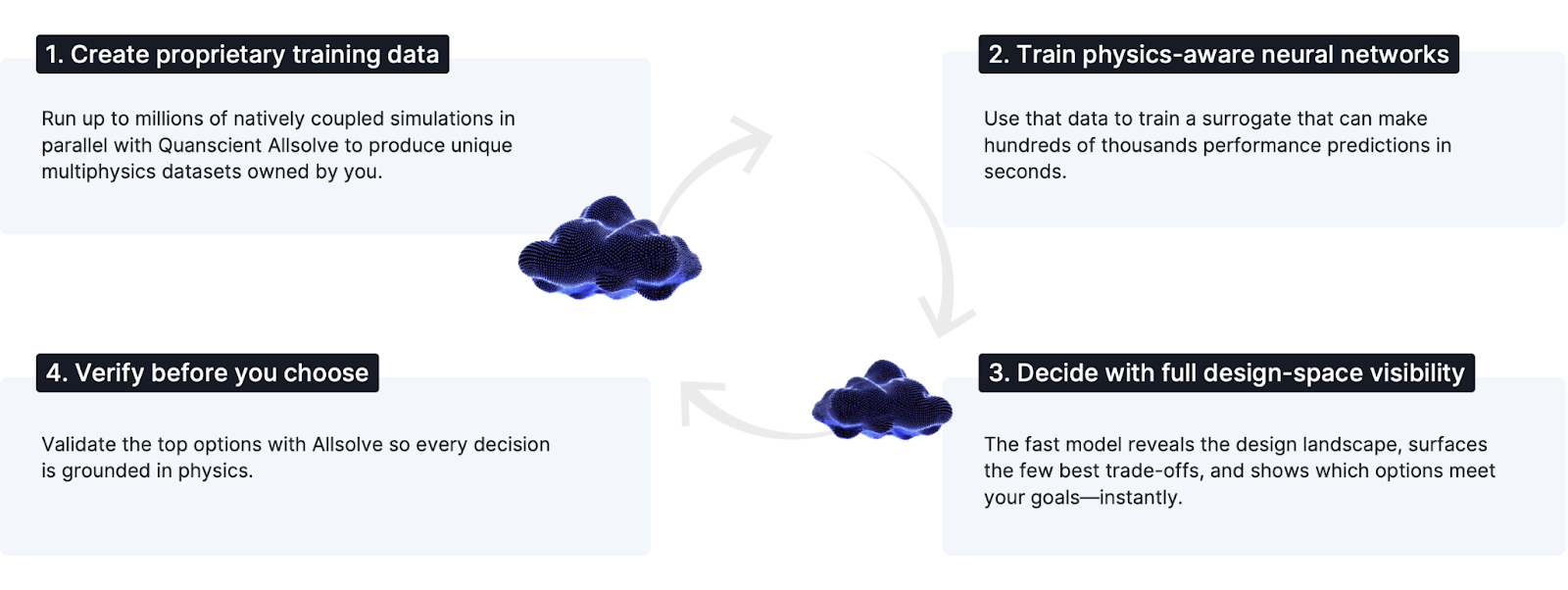

At the core of MultiphysicsAI is a simple but powerful idea:

Use simulation to generate high-fidelity data, then train AI models to explore the design space thousands of times faster.

Quanscient’s Allsolve generates large simulation datasets - often tens of thousands of samples - in hours rather than weeks, thanks to scalable cloud compute. Then, neural-network surrogate models learn the relationships between inputs (geometry, material properties, operating conditions) and outputs (KPIs, fields, spectra).

Once trained, these surrogate models run hundreds of thousands of inferences per second, enabling real-time:

- Multidimensional design optimization

- Trade-off exploration

- Robustness and yield analysis

- Inverse problem solving

- Rapid design iteration

And crucially, every promising candidate can still be verified at the end with deterministic FEM simulations, closing the loop between AI speed and physics accuracy. Taken together, the simulation-AI feedback loop follows a clear four-step sequence: generate high-fidelity simulation data, train a surrogate model on it, explore the design space through fast inference, and verify the most promising candidates with FEM.
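To make the loop concrete, here is a minimal sketch in plain Python. It is not Quanscient’s implementation: `simulate` is a hypothetical toy function standing in for an expensive FEM run, the single `radius_um` parameter is invented for illustration, and the "surrogate" is simple linear interpolation rather than a neural network.

```python
import bisect
import math


def simulate(radius_um):
    """Stand-in for one expensive FEM run (hypothetical toy response)."""
    return math.exp(-((radius_um - 42.0) / 15.0) ** 2)


# Step 1: generate training data with the "simulator".
train_x = [20.0 + 2.0 * i for i in range(21)]  # radii from 20 to 60 um
train_y = [simulate(x) for x in train_x]


def surrogate(radius_um):
    """Step 2: a cheap surrogate, here linear interpolation between samples."""
    i = bisect.bisect_right(train_x, radius_um) - 1
    i = max(0, min(i, len(train_x) - 2))  # clamp to a valid segment
    x0, x1 = train_x[i], train_x[i + 1]
    t = (radius_um - x0) / (x1 - x0)
    return train_y[i] + t * (train_y[i + 1] - train_y[i])


# Step 3: explore the design space densely using only the surrogate.
candidates = [20.0 + 0.01 * i for i in range(4001)]
best_radius = max(candidates, key=surrogate)

# Step 4: verify the chosen candidate with the full "simulation".
verified_kpi = simulate(best_radius)
predicted_kpi = surrogate(best_radius)
print(f"best radius: {best_radius:.2f} um")
print(f"surrogate: {predicted_kpi:.4f}, verified: {verified_kpi:.4f}")
```

The point of the sketch is the division of labour: 21 "expensive" evaluations feed a model that is then queried 4,001 times, and only the final candidate goes back to the simulator.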

Now that the core workflow is clear, the next step is to see how it behaves with real devices and real constraints. The following use cases show how MultiphysicsAI accelerates and elevates actual engineering problems.

Use Case 1: Optimizing a Piezoelectric Micromachined Ultrasonic Transducer (PMUT)

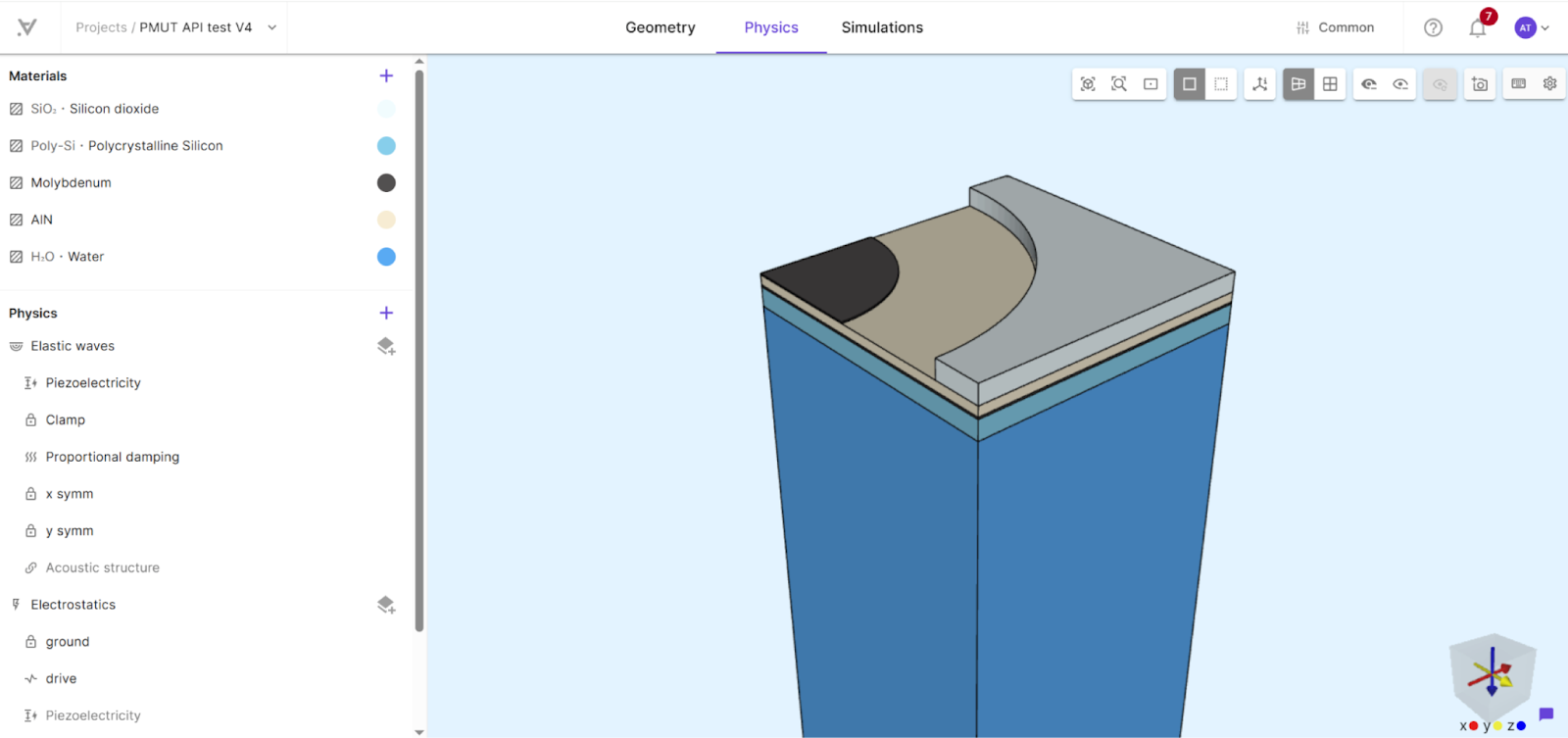

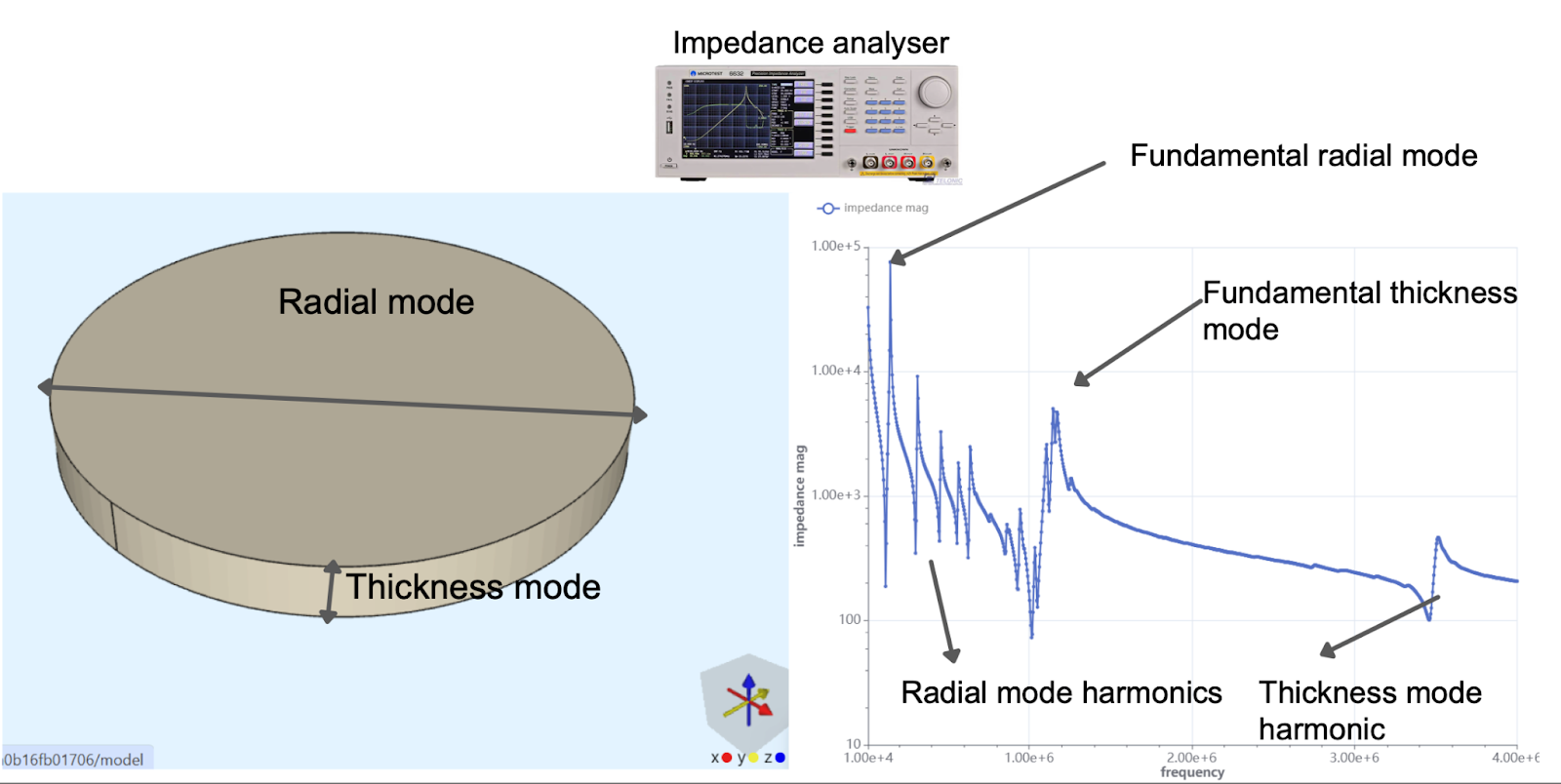

A circular PMUT was modeled using coupled piezoelectric–structural–acoustic FEM physics, with quarter-symmetry applied to keep computations efficient. The simulation was solved in the time domain, and the resulting waveforms were transformed into the frequency domain to compute key performance metrics such as transmit sensitivity and electrical impedance.

To illustrate how MultiphysicsAI accelerates this type of design work, the team generated a dataset of 10,000 PMUT simulations, each representing a unique combination of geometric parameters. A single simulation took roughly five seconds to run - and because thousands executed in parallel, the full dataset was produced in minutes rather than weeks. From this data, an AI surrogate model was trained to predict the PMUT’s main performance indicators - sensitivity, bandwidth, and center frequency - directly from geometry, almost instantly.
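A sweep like this could be sketched as follows. Everything here is an assumption for illustration: the parameter names and ranges are invented (the session does not list them), `run_simulation` is a placeholder rather than an Allsolve SDK call, and a local thread pool stands in for cloud workers. The helper `ideal_wall_time_s` shows the back-of-envelope arithmetic for perfectly parallel runs with no scheduling overhead; the 1,000-worker figure is illustrative.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor

# Hypothetical geometric parameters and ranges; the source does not list them.
PARAM_RANGES = {
    "membrane_radius_um": (20.0, 60.0),
    "piezo_thickness_um": (0.5, 2.0),
    "electrode_coverage": (0.5, 0.9),
}


def sample_designs(n, seed=0):
    """Draw n random parameter combinations, one dict per design."""
    rng = random.Random(seed)
    return [
        {name: rng.uniform(lo, hi) for name, (lo, hi) in PARAM_RANGES.items()}
        for _ in range(n)
    ]


def run_simulation(design):
    """Placeholder for submitting one FEM job; returns a made-up KPI."""
    kpi = design["membrane_radius_um"] * design["electrode_coverage"]
    return {**design, "kpi": kpi}


def ideal_wall_time_s(n_sims, seconds_per_sim, n_workers):
    """Wall time with perfect parallelism and no scheduling overhead."""
    return math.ceil(n_sims / n_workers) * seconds_per_sim


designs = sample_designs(200)
with ThreadPoolExecutor(max_workers=8) as pool:
    dataset = list(pool.map(run_simulation, designs))  # keeps input order

print(len(dataset), "rows")
print(ideal_wall_time_s(10_000, 5, 1_000), "s for 10,000 runs on 1,000 workers")
```

Each row of `dataset` pairs a geometry with its result, which is the shape of table a surrogate model would later be trained on.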

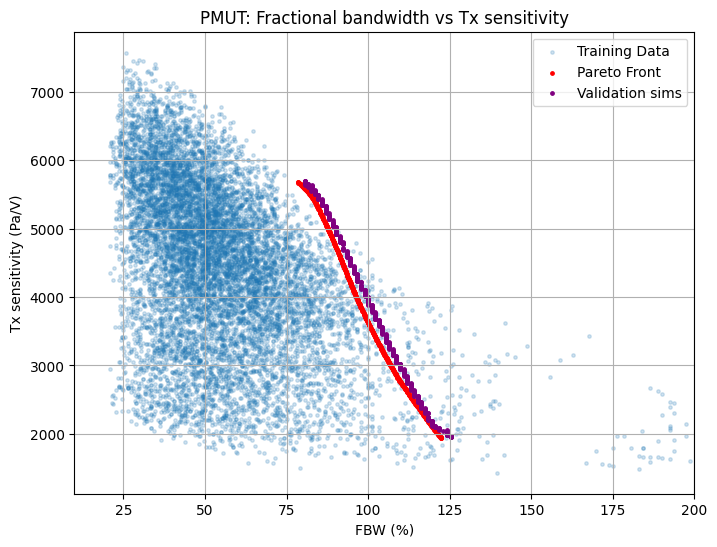

Using this surrogate, the team then carried out Pareto front analysis in real time. Traditional approaches would require days or even weeks of FEM computations to explore this trade-off space. Here, it took seconds.

The result was striking:

- The AI uncovered high-performing designs far outside the cluster of original training points.

- The best candidates were validated via Allsolve to ensure physical correctness.

- Designers could apply constraints (e.g., center frequency = 12 MHz) and instantly generate a new, compliant Pareto front.

The chart below visualizes the PMUT design space and the AI-generated Pareto front.

This transforms design meetings: instead of arguing blindly about “what might work”, engineers can navigate a landscape of optimal solutions and make informed decisions quickly.
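The constrained Pareto analysis described above can be illustrated with a short, self-contained sketch. The design records below are made-up numbers, not results from the session, and the center-frequency constraint is expressed as a tolerance band around 12 MHz, which is an assumption about how such a constraint would be applied in practice.

```python
def pareto_front(designs, objectives):
    """Return the designs that no other design dominates (all objectives maximized)."""
    front = []
    for d in designs:
        dominated = any(
            all(o[k] >= d[k] for k in objectives)
            and any(o[k] > d[k] for k in objectives)
            for o in designs
        )
        if not dominated:
            front.append(d)
    return front


def within(designs, key, target, tol):
    """Keep only designs whose value for `key` lies within target +/- tol."""
    return [d for d in designs if abs(d[key] - target) <= tol]


# Made-up surrogate predictions for four candidate geometries.
predictions = [
    {"id": "A", "sensitivity": 1.0, "bandwidth_mhz": 5.0, "center_freq_mhz": 12.0},
    {"id": "B", "sensitivity": 2.0, "bandwidth_mhz": 4.0, "center_freq_mhz": 12.1},
    {"id": "C", "sensitivity": 1.5, "bandwidth_mhz": 3.0, "center_freq_mhz": 11.9},
    {"id": "D", "sensitivity": 3.0, "bandwidth_mhz": 6.0, "center_freq_mhz": 15.0},
]
objectives = ("sensitivity", "bandwidth_mhz")

unconstrained = pareto_front(predictions, objectives)
constrained = pareto_front(
    within(predictions, "center_freq_mhz", 12.0, 0.2), objectives
)
print([d["id"] for d in unconstrained])  # ['D']
print([d["id"] for d in constrained])    # ['A', 'B']
```

Design D wins on both objectives but sits at 15 MHz, so once the constraint is applied the front shifts to A and B. The pairwise comparison is quadratic in the number of designs, which is fine for a sketch; larger candidate sets would call for a sort-based method.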

Use Case 2: MEMS Microspeaker - Balancing Loudness and Distortion

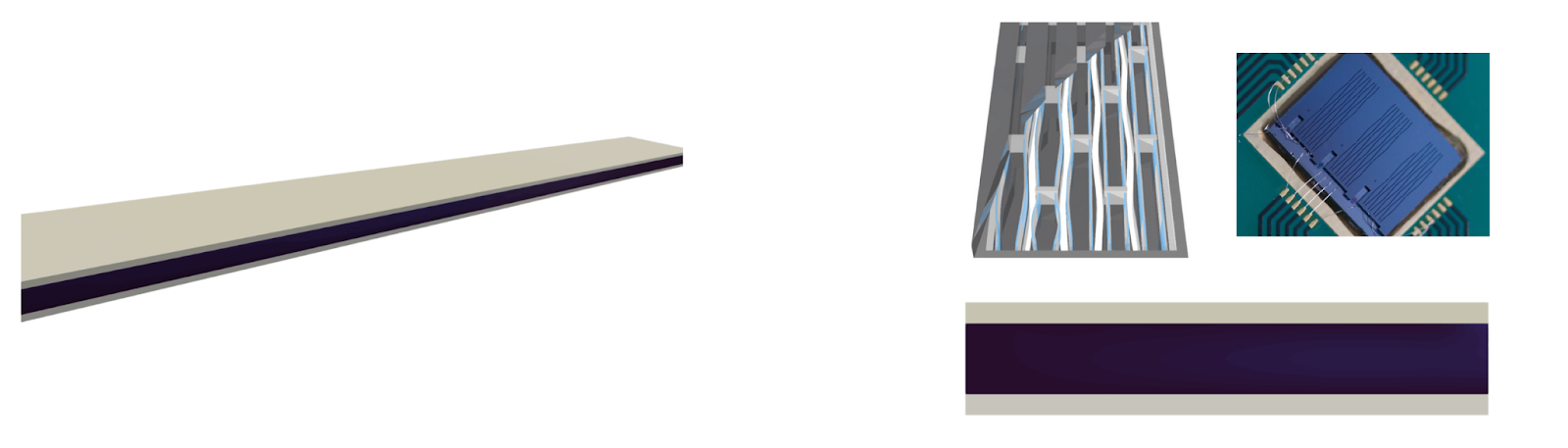

The next example focused on a MEMS electrostatic microspeaker, a device in which the engineering challenge lies in finding the right balance between loudness and audio quality. In practical terms, that meant pushing the sound pressure level as high as possible while simultaneously keeping total harmonic distortion to a minimum.

Again, the AI surrogate models generated a Pareto front in seconds, identifying superior design options. One candidate showed a 30% improvement in SPL while keeping distortion in check. But this example introduced an even more powerful capability:

AI for design robustness and manufacturing yield analysis.

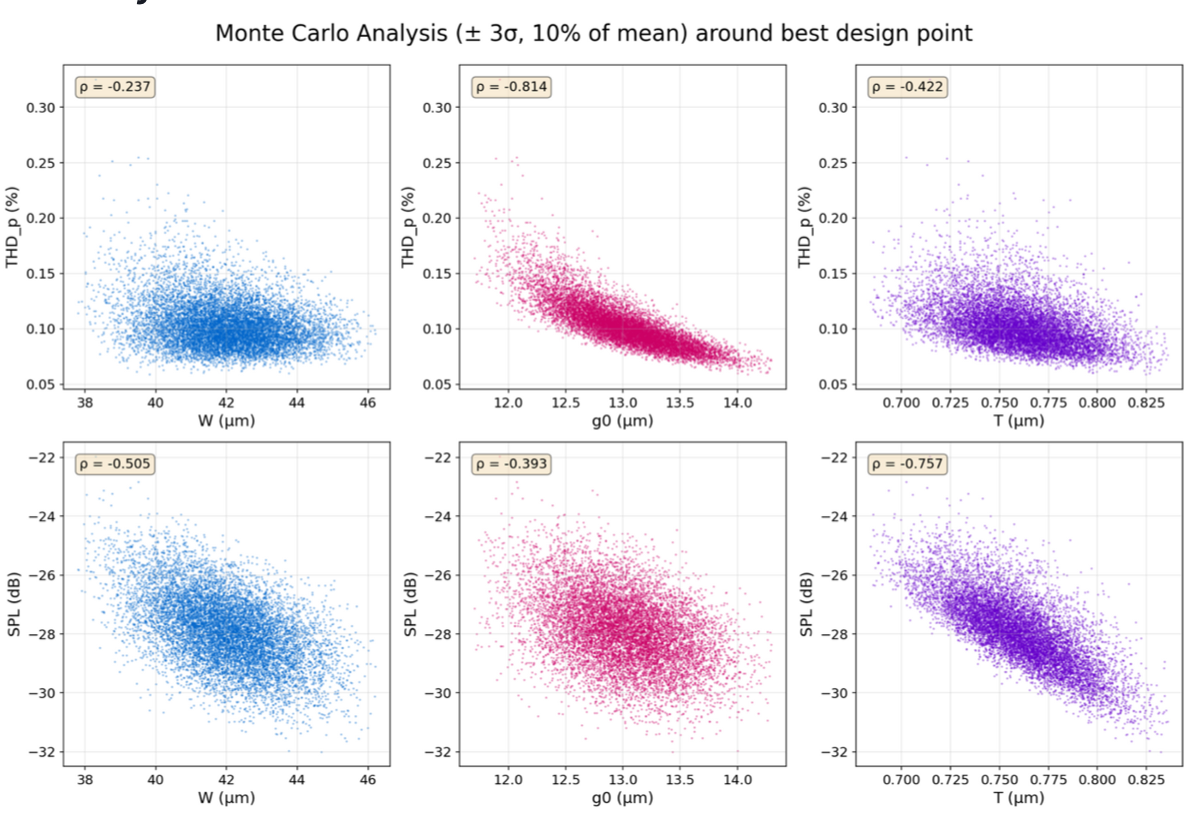

Using the surrogate model, engineers randomly varied geometric parameters within realistic manufacturing tolerances and generated:

- Distribution maps of KPI variation

- Pass/fail plots

- Yield predictions for different tolerance scenarios

What used to require hundreds - or thousands - of costly FEM evaluations could now be done in seconds, enabling manufacturing engineers to explore:

- Where tolerances are too tight

- Where additional margin is unnecessary

- How design choices impact yield and cost

The visualizations below show how the surrogate model predicts performance variation under realistic manufacturing tolerances, revealing how SPL and THD shift across thousands of randomized design samples.

This collapses the gap between design and manufacturing, letting teams optimize for real-world manufacturability rather than idealized performance.
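A tolerance study of this kind is essentially Monte Carlo sampling through the surrogate. The sketch below assumes a toy closed-form `surrogate` in place of a trained model, two invented parameters (`gap_um`, `thickness_um`), Gaussian manufacturing scatter, and hypothetical pass/fail specs of SPL ≥ 89 dB and THD ≤ 2%. None of these values come from the session.

```python
import random


def surrogate(gap_um, thickness_um):
    """Toy stand-in for a trained surrogate: returns (SPL in dB, THD in %)."""
    spl_db = 90.0 + 4.0 * (thickness_um - 2.0) - 6.0 * (gap_um - 1.0)
    thd_pct = 1.0 + 8.0 * abs(gap_um - 1.0) + 2.0 * abs(thickness_um - 2.0)
    return spl_db, thd_pct


def estimate_yield(sigma_gap, sigma_thickness, n=20_000, seed=0):
    """Fraction of randomized designs that meet both (hypothetical) specs."""
    rng = random.Random(seed)
    passed = 0
    for _ in range(n):
        gap = rng.gauss(1.0, sigma_gap)              # nominal gap: 1.0 um
        thickness = rng.gauss(2.0, sigma_thickness)  # nominal thickness: 2.0 um
        spl_db, thd_pct = surrogate(gap, thickness)
        if spl_db >= 89.0 and thd_pct <= 2.0:
            passed += 1
    return passed / n


# Compare two tolerance scenarios (standard deviations in micrometres).
yield_tight = estimate_yield(sigma_gap=0.02, sigma_thickness=0.05)
yield_loose = estimate_yield(sigma_gap=0.10, sigma_thickness=0.20)
print(f"tight tolerances: {yield_tight:.1%}, loose tolerances: {yield_loose:.1%}")
```

Because each surrogate call is cheap, sweeping many tolerance scenarios is just a loop over `estimate_yield`, which is what makes questions like "where is the tolerance tighter than it needs to be?" practical to answer.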

Use Case 3: Solving the Inverse Problem - Piezo Material Characterization

Piezoelectric materials are notoriously difficult to characterize. A single material may involve 12+ unknown parameters, entangled across multiple vibration modes. Experimental impedance spectra are dense, complex, and hard to interpret.

Using Allsolve, the team generated 10,000 simulated impedance spectra, each with randomized material constants and disk dimensions.

They then trained an AI model to do the inverse:

Given an impedance spectrum → predict the stiffness matrix, piezoelectric coupling constants, and permittivity matrix.

The results were clear. The AI model achieved error rates below 0.25% and delivered material characterization far more quickly than traditional approaches. This capability is now being developed into a new Allsolve beta feature, allowing users to upload impedance measurements and receive AI-derived material parameters in return. A process that has long been known for its difficulty becomes both more accessible and more accurate.
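The inverse-problem idea can be illustrated with a deliberately simplified sketch. It swaps the neural network for a nearest-neighbour lookup over a library of simulated spectra, and it uses a toy single-resonance `forward` model with two invented parameters (`stiffness`, `coupling`) instead of a full piezoelectric simulation with a dozen or more constants. It only shows the pattern (simulate many spectra, then map a spectrum back to parameters) and says nothing about the accuracy reported above.

```python
import math
import random

FREQS = [0.5 + 0.04 * i for i in range(51)]  # normalized frequency grid


def forward(stiffness, coupling):
    """Toy single-resonance log-magnitude spectrum (not a real piezo model)."""
    return [
        math.log(coupling / math.sqrt((stiffness - f * f) ** 2 + (0.1 * f) ** 2))
        for f in FREQS
    ]


def build_library(n, seed=0):
    """Simulate n spectra with randomized parameters: (params, spectrum) pairs."""
    rng = random.Random(seed)
    library = []
    for _ in range(n):
        params = (rng.uniform(1.0, 4.0), rng.uniform(0.5, 2.0))
        library.append((params, forward(*params)))
    return library


def invert(spectrum, library):
    """Return the parameters of the library spectrum closest to the input."""
    def distance(entry):
        return sum((a - b) ** 2 for a, b in zip(entry[1], spectrum))
    return min(library, key=distance)[0]


library = build_library(2000)
true_params = (2.5, 1.2)
measured = forward(*true_params)  # stands in for an uploaded measurement
estimate = invert(measured, library)
print("true:", true_params, "estimated:", tuple(round(p, 3) for p in estimate))
```

A lookup like this scales poorly as the number of unknown parameters grows, which is one reason a learned inverse model is attractive for the 12+ parameter case described above.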

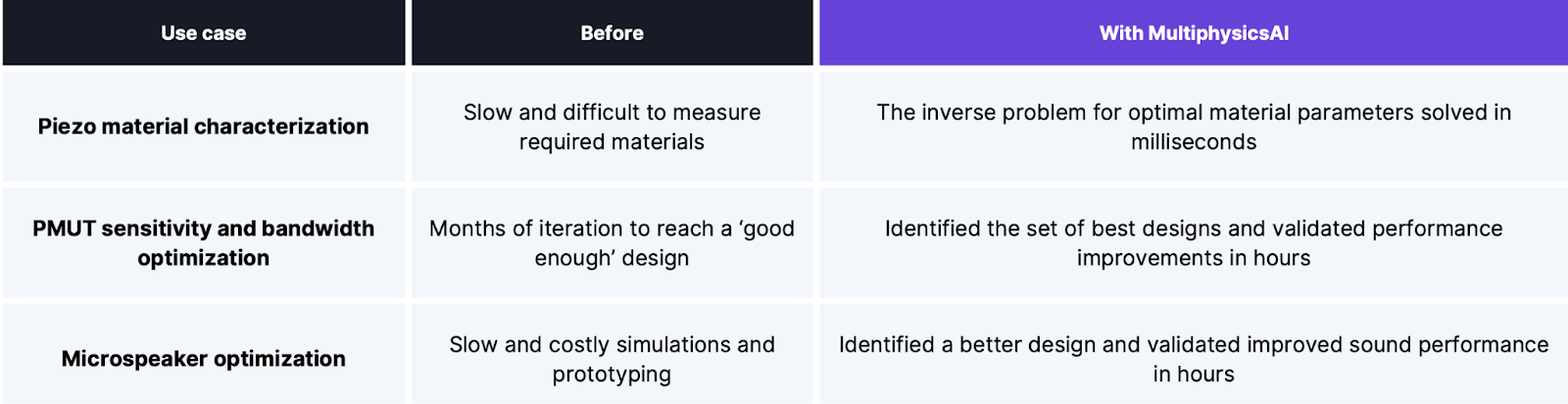

When viewed together, these use cases show how dramatically the workflow improves speed, accuracy, and decision-making across domains.

Why Cloud-Native Simulation Infrastructure Matters

MultiphysicsAI is possible because Allsolve brings together a set of capabilities rarely found in a single simulation platform. It supports strongly coupled multiphysics (piezoelectric, thermal, acoustic, structural, electromagnetic), allowing engineers to model real devices without simplifying away the physics that matter. It runs massively in parallel across thousands of CPUs, so even complex models can be executed at scale. Because the platform is entirely cloud-native, engineers can start large-scale studies immediately, without software installation, cluster configuration, or hardware management. Through its Python SDK, Allsolve also slots naturally into modern engineering workflows, making it easy to launch large simulation sweeps and connect them directly to AI training pipelines.

For engineers, this combination turns Allsolve from a traditional solver into a data engine - one that can generate the high-fidelity, domain-specific datasets that physics-aware AI models require. Unlike language models trained on public text, engineering AI relies on proprietary, application-specific data, and the ability to produce that data on demand is what makes MultiphysicsAI so powerful in practical design work.

Toward Fully Intelligent Engineering Workflows

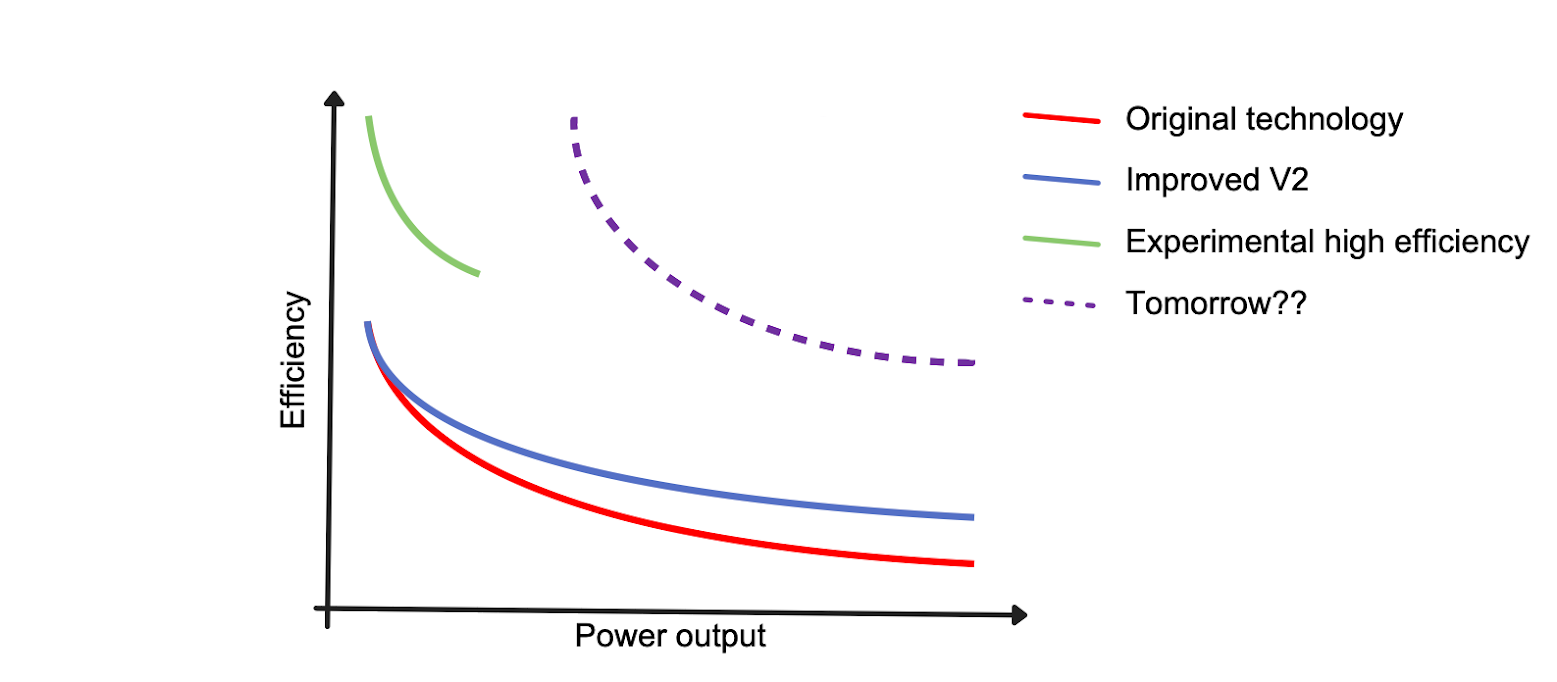

We are entering an era where simulation becomes generative, AI becomes physics-aware, and engineering teams gain access to tools that uncover possibilities far beyond human iteration alone.

MultiphysicsAI shows how cloud-scale simulation and intelligent surrogates can transform product development, from MEMS to piezo materials to complex multiphysics systems. The result is faster innovation, richer exploration, and more confident decisions.

This new paradigm doesn’t simply change the mechanics of running simulations - it expands what engineers can envision and achieve. The long-term vision is clear:

A unified environment where simulation, AI, design optimization, and manufacturability analysis live side by side - and feed into each other.

If you’d like to explore the full technical walkthrough behind this shift, you can dive deeper into the complete session here.